Times Square’s Luminescent News Tickers and Public Spaces

Posted on | January 1, 2018 | No Comments

Times Square has a storied history as one of the planet’s most recognizable public spaces. Its “zipper” is an electronic news display that has reported and reflected our collective response to news events. It helped establish Times Square as the place to be when major events happen, as we are reminded every New Year’s Eve.

Each December 31, crowds gather to celebrate the dawning of a new year (except for pandemic-ridden 2020). Thousands line up, often in freezing cold weather, to see the famous crystal time ball drop. The ball was created by Walter F. Palmer as a way to celebrate the arrival of 1908 and was inspired by the Western Union Telegraph building’s downtown clock tower, which dropped an iron ball each day at noon. Now millions participate with the Times Square crowd as television audiences from around the world tune in to the final countdown.

Times Square is now flooded with variously evolved reader boards (Reuters, ABC, Morgan Stanley, etc.), all based on the original 1928 Times Tower electric ticker.

Evan Rudowski argues he was sending tweets long before Twitter launched in 2006. In 1986, Rudowski got a job scanning newswires like AP and UPI and entering short 80-character news briefs into what has been called the “Zipper”, a five-foot-high, 380-foot-long electric display providing breaking news and stock prices to the passing crowds. He typed in the messages on an IBM PC and transmitted them from an office on Long Island to the famous ticker display at One Times Square in New York City.

In 1928 the New York Times encircled its signature building with an outdoor incandescent message display. In its November 1928 print edition, it headlined “HUGE TIMES SIGN WILL FLASH NEWS.” It explained, “Letters will move around Times Building telling of events in all parts of the world.”

The zipper’s first message announced the results of the 1928 Herbert Hoover – Al Smith presidential election. The crowd reaction was probably mixed as the loser Al Smith was from New York. On the other hand, he didn’t even carry the state in the electoral college.

Nevertheless, the zipper would reach out to the nation through major announcements such as the bombing of Pearl Harbor, the end of World War II on V-J Day, the assassination of President John F. Kennedy, and the release of the 52 hostages who were held in Iran for 444 days.

The surrender by the Japanese drew one of the biggest crowds. On Aug. 14, 1945, just after 7 pm, the “zipper” flashed: “***OFFICIAL***TRUMAN ANNOUNCES JAPANESE SURRENDER” and the Times Square crowd exploded in celebration. Photojournalist Alfred Eisenstaedt’s picture of a sailor kissing a young nurse he didn’t know memorialized the event, symbolizing the relief of a dramatic war coming to its end.

The foot traffic in Times Square is extraordinary, with the daily number of pedestrians exceeding 700,000. It’s a mesmerizing and sometimes dizzying experience as you cross 42nd Street on your way up Broadway. Hundreds of different displays provide a kaleidoscope of vivid imagery.

For a history of the stock ticker, see my post on Thomas Edison.


© ALL RIGHTS RESERVED

Anthony J. Pennings, PhD is Professor and Associate Chair of the Department of Technology and Society, State University of New York, Korea. Before joining SUNY, he taught at Hannam University in South Korea and from 2002-2012 was on the faculty of New York University. Previously, he taught at St. Edwards University in Austin, Texas, Marist College in New York, and Victoria University in New Zealand. He has also spent time as a Fellow at the East-West Center in Honolulu, Hawaii.


Tags: stock ticker > ticker tape > Times Square > Times Square zipper > V-J Day

Auctioning Radio Spectrum for Mobile Services and Public Safety

Posted on | December 17, 2017 | No Comments

The breakup of AT&T in the 1980s opened the way for new companies to emerge and offer additional services, including wireless or mobile telecommunications. At the time, wireless systems lacked the spectrum and infrastructure for broadband, and telcos were restricted by their “common carrier” status from carrying content. Government policy needed to address how to supply the public’s radio spectrum to private carriers in order to enhance the nation’s telecommunications abilities and its e-commerce or “m-commerce” opportunities, as well as its ability to protect public safety and respond to disasters.

Since the 1970s, the Federal Communications Commission (FCC) had segmented the spectrum to provide two analog cellular licenses in about 500 geographically separated areas. One was traditionally given to the Bell system (later to each of the Baby Bells) and another was awarded in each area by lottery. The second license was often subject to various speculation schemes, creating thousands of “cellionaires” and driving up the costs of mobile telephony. Additional costs were added by the high prices the Bell companies charged for interconnecting the wireless portion to the larger public-switched network.

In 1993, President Clinton signed the Emerging Telecommunications Technology Act to release radio spectrum to the private sector. Previously, cellular offered its services with only 50 MHz of spectrum. The Act allocated 200 MHz to be released over a 10-year period for a variety of wireless offerings such as PCS (Personal Communications Services). PCS emerged in recognition of the need to expand mobile services beyond car phones on roads and highways to a wider array of antennas that would serve more sophisticated services and extended mobility. A plan emerged in Washington D.C. to privatize parts of the electromagnetic spectrum.[1]

The Omnibus Budget Reconciliation Act of 1993 had a rider that allowed the FCC to auction off the public’s radio spectrum. Exercising his privilege as Vice-President, Al Gore broke the Senate tie and sent it on to the President to be signed. The legislation, which was the first bill to balance the budget in 30 years, gave the FCC the authority to privatize the wireless spectrum. On July 25, 1994, the Federal Communications Commission commenced Auction No. 1, netting some $617 million in winning bids by selling ten Narrowband Personal Communications Services (N-PCS) licenses used for paging services. The subsequent auction in March 1995 raised $7.7 billion for the US Treasury as companies like AirTouch and AT&T procured valuable licenses to provide wireless services. Consequently, wireless communications prices went down and services expanded.

Technological advances led to a new (2G) generation of digital wireless in 1990. But it was still offered over circuit-switched networks, meaning that each conversation still needed its own channel and was slow to connect. TDMA (Time Division Multiple Access) was becoming the standard in the US, while GSM (Global System for Mobile Communications) was used in Europe and PDC (Personal Digital Communications), which used packet-switching technologies, was dominant in Japan.

Soon, 2.5G standards provided faster data speeds through the use of data packets, an approach known as General Packet Radio Service (GPRS). The new standards provided 56-171 Kbps of service and allowed Short Message Service (SMS) and Multimedia Messaging Service (MMS) as well as WAP (Wireless Application Protocol) for Internet access. An advanced form of GPRS called EDGE (Enhanced Data Rates for Global Evolution) was used for the first Apple mobile phone and is considered the first version using 3G technology. For a history, see my previous post on wireless generations.[3]

In 2008, the FCC auctioned licenses to use portions of the 700 MHz Band for commercial purposes. Mobile wireless service providers have since begun using this spectrum to offer mobile broadband services for smartphones, tablets, laptop computers, and other mobile devices. The electromagnetic characteristics of the 700 MHz Band give it excellent propagation, allowing signals to penetrate buildings and obstructions easily and to cover larger geographic areas with less infrastructure (relative to frequencies in higher bands).

By July 1, 2009, the U.S. Government raised $52.6 billion in revenues by licensing electromagnetic spectrum.

More recently, public auctions of spectrum have been used to support the development of FirstNet, a nationwide network for public safety and disaster risk reduction. The FCC raised $7 billion through spectrum auctions to fund the network, which operates in the 700 MHz band. The spectrum auction that concluded in January 2015 raised nearly $45 billion.

Notes

[1] From “Administration NII Accomplishments,” http://www.ibiblio.org/nii/NII-Accomplishments.html. October 25, 2000.

[2] Hundt, R. (2000) You Say You Want a Revolution? A Story of Information Age Politics. New Haven: Yale University Press.

[3] Pennings, A. (2015, April 17). Diffusion and the Five Characteristics of Innovation Adoption. Retrieved from https://apennings.com/characteristics-of-digital-media/diffusion-and-the-five-characteristics-of-innovation-adoption/


The Landsat Legacy

Posted on | November 3, 2017 | No Comments

“It was the granddaddy of them all, as far as starting the trend of repetitive, calibrated observations of the Earth at a spatial resolution where one can detect man’s interaction with the environment.” – Dr. Darrel Williams, Landsat 7 Project Scientist

The Landsat satellite program is the longest-running program for sensing, acquiring, and archiving satellite-based images of Earth. Since the early 1970s, Landsat satellites have constantly circled the Earth, taking pictures, collecting “spectral information,” and storing the results for scientific and emergency management services. These images serve a wide variety of uses, from gauging global agricultural production to monitoring the risks of natural disasters by organizations like the UNISDR. Landsat-7 and Landsat-8 are the current workhorses providing remote sensing services.[1]

The Landsat legacy began in the midst of the “space race,” when William Pecora, the director of the U.S. Geological Survey (USGS), proposed the idea of using satellites to gather information about the Earth and its natural resources. It was 1965, and the U.S. was engaged in a highly charged Cold War with the Communist world and space was seen as a strategic arena. Extensive resources had been applied to gathering satellite imagery for espionage, reconnaissance and surveillance purposes. Some of the technology was also being shared with NASA. Pecora stated that the program was conceived… “largely as a direct result of the demonstrated utility of the Mercury and Gemini orbital photography to Earth resource studies.” A remote sensing satellite program to gather facts about the natural resources of our planet was beginning to make sense.

In 1966, the USGS and the Department of the Interior (DOI) began to work with each other to produce an Earth-observing satellite program. They faced a number of obstacles including budget problems due to the increasing costs of the war in Vietnam. But they persevered, and on July 23, 1972, the Earth Resources Technology Satellite (ERTS) was launched. It was soon called Landsat 1, the first of the series of satellites launched to observe and study the Earth’s landmasses. It carried a set of cameras built for remote sensing by the Radio Corporation of America (RCA).

The Return Beam Vidicon (RBV) system consisted of three independent cameras that sensed different spectral wavelengths. They could obtain visible and near-infrared (IR) photographic images of the earth. RBV data was processed to 70 millimeter (mm) black and white film rolls by NASA’s Goddard Space Flight Center and then analyzed and archived by the U.S. Geological Survey (USGS) Earth Resources Observation and Science (EROS) Center.

The second device on Landsat-1 was the Multispectral Scanner (MSS), built by the Hughes Aircraft Company. The first five Landsats provided radiometric images of the Earth through the ability to distinguish very slight differences in energy. The MSS sensor responded to Earth-reflected sunlight in four spectral bands.

This video discusses remote sensing imagery, how it is created, and variations in resolution.


After 45 years of operation, Landsat now manages and provides the largest archive of remotely sensed – current and historical – land data in the world. A partnership between NASA and the U.S. Geological Survey (USGS), Landsat has played a critical role in monitoring, analyzing, and managing the earth resources needed for sustainable human environments.

Landsat uses a passive approach, measuring light and other energy reflected or emitted from the Earth. Much of this light is scattered by the atmosphere, but techniques have been developed for the Landsat space vehicles to dramatically improve image quality. The following video discusses the Landsat programs in some depth and how researchers can use their data.

Each day, Landsat-8 adds another 700 high-resolution images to the extraordinary database, giving researchers the capability to assess changes in Earth’s landscape over time. Landsat-9 will have even more sophisticated technologies when it is launched into space in 2020.

Recently, major disasters due to hurricanes in the southern US states and the Caribbean have been monitored by the Landsat satellites. The year 2017 will be noted for major disasters including Hurricane Maria in Puerto Rico, Hurricane Irma in the Caribbean, and Hurricane Harvey and associated flooding in Texas.[2]

Notes

[1] Landsat 8 was launched in 2013 and images the entire Earth every 16 days. It is the eighth Landsat to be launched, although Landsat 6 crashed into the Indian Ocean during liftoff in October 1993.

[2] USGS has released a recent report on all 4 major hurricanes of 2017 that adversely affected the southern US.


Russian Interference, Viral Sharing, and Friends Lying to Friends on Social Media in the 2016 Elections

Posted on | October 5, 2017 | Comments Off on Russian Interference, Viral Sharing, and Friends Lying to Friends on Social Media in the 2016 Elections

As discussed previously, social media is now a central part of modern democracies and their election processes. This was touted in the Obama presidential election in 2008 but became even more evident in the 2016 U.S. election, notable for the unexpected 304-227 electoral college victory by Donald Trump. The real estate magnate and reality show TV star occupied the White House in January 2017, despite 2.8 million more US citizens voting for Hillary Clinton.

In this post, I look at some of the influence by Russia and other foreign entities on the recent election as investigated and reported on by the Mueller Special Counsel’s Report on the Investigation into Russian Interference in the 2016 Presidential Election. My main concern, however, is the viral spreading of “memes” on social media by compliant voters who fail to read or make critical distinctions about the posts and articles they share with their “friends.” These collaborators contribute to the spreading of “fake news” from organizations like the Internet Research Agency (IRA), the notorious Russian “troll farm.” Emotionally disturbing messages emerged from both political poles, though, and were meant to manipulate the behaviors and emotions of unsuspecting people on various social media.

It is likely that Congress and the press, as well as the public, will be parsing this phenomenon over the next couple of months, if not years, as we all strive to understand where democracy is heading in the age of social media in politics.

In early September 2017, Facebook released results of a preliminary investigation looking into possible Russian interference in the 2016 U.S. election. They announced that approximately US$100,000 in ad spending was associated with Russian profiles during the period from June 2015 to May 2017. They later announced to Congress that over 3,000 Facebook ads were believed to be from Russian sources. How powerful were these ads? How were they aided by overzealous Sanders supporters? Were shares by Trump supporters significant in influencing independent and GOP voters?

Did Russian operatives and agents carefully target the supporters of Bernie Sanders, Jill Stein, and Donald Trump with emotionally and politically charged ads? And how many of the targeted citizens “shared” Russian-produced propaganda with their friends and others over Facebook and other social media? The controversy has positioned Mark Zuckerberg, the guy in the brown tee shirt below, and CEO of the $500 billion social media giant, as possibly the most important person in the world when it comes to the future of global democracy.

Zuckerberg is being proactive as some lawmakers are proposing to regulate social media by requiring all major digital media platforms with 1,000,000 or more users to monitor and keep public records of ad buys of more than $10,000. These digital platforms, as well as broadcast, cable and satellite providers, would also have to make reasonable efforts to ensure that ads and other electioneering communications are not purchased by foreign nationals, either directly or indirectly.

Some major questions to be addressed when it comes to the election interference issue are:

Who was providing the demographic data for targeting specific potential voters on the US side? We know that the key swing states of Michigan and Wisconsin were targeted, and Trump carried Michigan by 10,700 votes and Wisconsin by 22,748 votes. These were extremely narrow margins. Add Pennsylvania’s 44,000-vote margin, and the election went to Trump by a combined margin of roughly 78,000 votes across the three states.

What keywords were they using to identify susceptible targets? For example, “Jew hater” and the N-word have actually been used to target specific audiences. Did they use specific words, phrases, or images that incite hate or a sense of unfairness? The Black Lives Matter movement has been a particularly important target, as Google’s investigation of foreign intervention found.

What foreign entities were involved and how extensive were the social media ad buys? What were they saying and what kind of images are being produced? Who was producing and designing the manufactured and posted “memes”? Memes are designed communications that combine provocative imagery with text that hooks the viewer. These captioned photos are shared easily on social media and are also associated with stories on websites. PropOrNot’s monitoring report identified more than 200 websites as routine peddlers of Russian propaganda during the 2016 election season. They had an audience of over 15 million Americans. PropOrNot estimates that stories planted or promoted on Facebook were viewed more than 213 million times.[1]

Even more significant are the viral metrics that characterize social media. Who was sharing this information, and how dynamic were the virality and network effects? In other words, how fast and wide were the messages being spread from individual to individual? This is similar to word of mouth (WOM) in the nonmedia world, and it is highly prized because it shows active and emotional involvement. It can create a “snowball effect” that multiplies a post’s reach to friends of friends and beyond. With 2 billion active users a month, Facebook is clearly a concern, as are other platforms such as Twitter, Instagram, and Snapchat.
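
One way to picture the “snowball effect” is a toy branching model, sketched here in Python. It assumes, purely for illustration, that every share prompts the same average number of further shares in the next round. The function name and the parameters `seed_shares`, `shares_per_sharer`, and `rounds` are hypothetical, and real sharing on any platform is far messier than this.

```python
def cumulative_shares(seed_shares: int, shares_per_sharer: int, rounds: int) -> int:
    """Total shares after a number of sharing rounds in a toy branching model.

    Assumption (not from any platform's data): each share in one round
    triggers exactly `shares_per_sharer` new shares in the next round.
    """
    total = seed_shares      # shares made so far, across all rounds
    current = seed_shares    # shares made in the most recent round
    for _ in range(rounds):
        current = current * shares_per_sharer
        total += current
    return total


if __name__ == "__main__":
    # Hypothetical numbers: 10 initial shares, followed for 5 rounds.
    for k in (1, 2, 3):
        print(f"{k} re-share(s) per share -> {cumulative_shares(10, k, 5)} total shares")
```

Even in this simplified model, a small change in how many friends pass a post along makes a large difference in total reach after a few rounds, which is why sharing behavior matters as much as the original ad buy.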

An interesting case dealt with a fake news story about paid protesters being bused to an anti-Trump demonstration in Austin, Texas. That story started on Twitter with the hashtags #fakeprotests and #trump2016 and was quickly shared some 16,000 times. It also migrated to Facebook, where it was shared more than 350,000 times.

So we are entering into an age when social media metrics are not just crucial for modern advertising and e-commerce but essential for political communication as well. We will see an increasing need for skilled digital media analysts that measure social media metrics ranging from simple counting measures of actions like check-ins, click-through rates, likes, impressions, numbers of followers, visits, etc. to other important contextual metrics including conversation volume, engagement, sentiment ratios, conversion rates, end action rates, and brand perception lifts.[3]
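
A few of the ratio metrics named above can be sketched in Python. The definitions used here are common conventions rather than formulas taken from Lovett or from any platform, and the function names and sample counts are hypothetical.

```python
def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def click_through_rate(clicks: int, impressions: int) -> float:
    """Share of impressions that led to a click."""
    return rate(clicks, impressions)


def engagement_rate(likes: int, comments: int, shares: int, impressions: int) -> float:
    """Share of impressions that led to any counted interaction (assumed definition)."""
    return rate(likes + comments + shares, impressions)


def conversion_rate(conversions: int, clicks: int) -> float:
    """Share of clicks that ended in the desired action."""
    return rate(conversions, clicks)


if __name__ == "__main__":
    # Made-up counts for a single post, for illustration only.
    print(f"CTR:        {click_through_rate(50, 1000):.1%}")
    print(f"Engagement: {engagement_rate(10, 5, 5, 1000):.1%}")
    print(f"Conversion: {conversion_rate(5, 50):.1%}")
```

Simple counts such as likes or followers feed these ratios, while contextual metrics like sentiment or brand perception need additional data that a sketch like this does not cover.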

Countries interfering in the elections of other countries is not a new phenomenon. But rarely have they been able to enlist so many unwitting collaborators. As we continue to move into the era of cybernetic democracy, a new vigilance is required at many levels. National and local governments need to be wary, social media platforms like Facebook need to review content and sources, and users also need to take responsibility for reading what is being passed around and using ethics-based judgment before spreading rumors and materials that incite hate and social discord.

Notes

[1] PropOrNot’s monitoring report investigates social media stories planted or promoted by the disinformation campaigns.

[2] ThinkProgress recently began a series investigating foreign influence on social media. Founded in 2005, ThinkProgress is funded by the Center for American Progress Action and covers the connections and interactions between politics, policy, culture, and social justice.

[3] I got my start in teaching social media metrics with John Lovett’s (2011) Social Media Metrics Secrets (John Wiley and Sons). It is still highly relevant.

© ALL RIGHTS RESERVED

Anthony J. Pennings, Ph.D. is Professor and Associate Chair of the Department of Technology and Society, State University of New York, Korea. From 2002-2012 he was on the faculty of New York University. Previously, he taught at Hannam University in South Korea, Marist College in New York, Victoria University in New Zealand, and St. Edwards University in Austin, Texas, where he keeps his American home. He spent 6 years as a Fellow at the East-West Center in Honolulu, Hawaii.


Tags: 2016 U.S. election > cybernetic democracy > Russian interference > Social Media Metrics

Assessing Digital Payment Systems

Posted on | August 29, 2017 | Comments Off on Assessing Digital Payment Systems

In digitally-mediated environments, new forms of currency and payment systems continue to gain public acceptance. Changes in technology, contactless options, and the ease of electronic payments make cash less desirable. While credit cards remain the most popular form of payment with some 70% of the market, other systems are emerging. PayPal, for example, is used for some 15% of online payments.

The major types of payment systems for B2C transactions:

* Payment Cards – Credit, Charge, Debit

* e-Micropayments – less than $5

* Digital Wallets – AliPay, Apple Pay, Samsung Pay

* e-Checking – Representation of a check sent online

* Digital eCash

* Stored Value (Smart Cards, PayPal, Gift Cards, Debit Cards)

* Net Bank – Digital payments directly from your bank

* Cryptocurrencies – Peer-to-peer blockchain-enabled digital coins (Bitcoin, Ethereum, Dash)

What factors determine the success of a digital payment system? Here is a list of some important criteria to consider when it comes to assessing the viability of a currency/payment system.

* Acceptability – Will merchants accept it?

* Anonymity – Identities and transactions remain private

* Independence – Doesn’t require extra hardware or software

* Interoperability – Works with existing systems

* Security – Reduced risks to payer and payee

* Durability – Not subject to physical damage

* Divisibility – Can be used for large as well as small purchases

* Ease of use – Is it easier to use than a credit card?

* Portability – Easy to carry around

* Uniformity – Bills, coins, and other units are the same size and shape and value

* Limited supply – Currencies lose value if too abundant [1]
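
The criteria above can be turned into a rough comparison checklist. The Python sketch below assumes a 0-5 rating per criterion and equal weighting, both of which are illustrative choices rather than part of the St. Louis Fed material, and the example ratings are invented and do not assess any real payment system.

```python
# Criteria names follow the list above; the snake_case keys are hypothetical.
CRITERIA = [
    "acceptability", "anonymity", "independence", "interoperability",
    "security", "durability", "divisibility", "ease_of_use",
    "portability", "uniformity", "limited_supply",
]


def viability_score(ratings: dict) -> float:
    """Average of 0-5 ratings across all criteria; unrated criteria count as 0."""
    return sum(ratings.get(name, 0) for name in CRITERIA) / len(CRITERIA)


if __name__ == "__main__":
    # Invented ratings for an imaginary payment system.
    example = {name: 3 for name in CRITERIA}
    example["security"] = 5
    example["anonymity"] = 1
    print(f"Score: {viability_score(example):.2f} out of 5")
```

An equal-weight average is the simplest option; a merchant might reasonably weight acceptability and security more heavily than uniformity.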

What about payment systems for large transactions between businesses or governments? The major types of payment systems for B2B, B2G or G2G transactions are:

* Electronic Funds Transfer – ACH, SWIFT, CHIPS

* Enterprise Invoice Presentment and Payment (EIPP)

* Wire Transfers – FEDWIRE

The Federal Reserve’s FEDWIRE transfers trillions of US dollars every day and is a major system for facilitating transactions between banks and other financial institutions.

Notes

[1] Types of payment systems and criteria drawn from “Functions of Money – The Economic Lowdown Podcast Series, Episode 9.” Functions of Money, Economic Lowdown Podcasts | Education Resources | St. Louis Fed. N.p., n.d. Web. 15 July 2017.


Tags: ACH > Alipay > CHIPS > Fedwire > PayPal > SWIFT

Digital Content Flow and Life Cycle: The Value Chain

Posted on | July 14, 2017 | No Comments

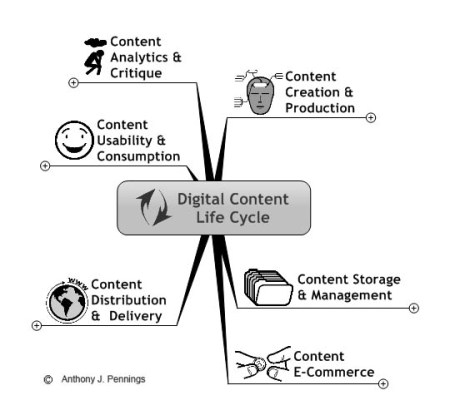

In this post, I connect the idea of the digital content life-cycle to the concept of a value chain. E-commerce and other digital media firms can use this process to compare and identify value-creating steps and prioritize them within the organization’s workflow. They also become important in anticipating and analyzing needed human competences and digital skill sets.

The graphic below represents the key steps in the digital media production cycle, starting at one o’clock.

Long recognized by economists as part of the commodity production process where raw materials are processed into a final product via a series of value-adding activities, the value chain concept was refined by Michael Porter in 1985.

The idea of the value chain is based on the view of organizational outcomes as a series of transformative steps. Porter focused on the processes of organizations, seeing productive activities as a series of sequential steps. Central to this idea is seeing a manufacturing (or service) organization as a system, made up of subsystems each with inputs, transformation processes, and outputs.

Porter distinguished “primary activities” in the value chain from “secondary activities” in the sense that the former were more directly related to a final output. Primary activities include inbound logistics, production/operations, outbound logistics, marketing and sales, and services.[1] Raw materials come into the company through logistical processes and pass through production facilities that are coordinated via operations. These resources are transformed into a final product that is marketed to customers.

Activities such as procurement, human resource management, technological development, and infrastructure are secondary only in the sense that they provide support functions to the primary value chain. Departments such as accounting, finance, general management, government relations, legal, planning, public affairs, and quality assurance are necessary, but not primary. Porter didn’t diminish these activities but rather placed them in relation to output goals.

Analyzing both primary and secondary activities can help identify value-creating steps and supporting activities and prioritize them within the organization’s workflow.

An analysis of the flow of digital content production can help companies determine the steps of web content globalization.

In the early days of the Internet, Rayport and Sviokla (1995) presented the idea of a virtual value chain in the Harvard Business Review. They listed five activities involving the “virtual world of information” that help generate competitive advantage. In their article they suggest that gathering, organizing, selecting, synthesizing, and distributing information help companies use content and value-added resources to build new products and services and enhance customer relationships.[2]

They even suggested that newspapers could use the five value-adding steps to construct new products with the digital photography and other information resources they have available. Remember, this was a time when the JPEG image was fairly new and companies like RealNetworks were competing to become a video standard. No Facebook existed.

A digital chain analysis involves diagramming then analyzing the various value-creating activities in the content production, storage, monetization, distribution, consumption, and evaluation process. Each step can be analyzed and evaluated in terms of its contribution to the final outputs.

Furthermore, it involves an analysis of the “informating” process, the stream of data that is produced in the content life cycle. Digital content passes through a series of value-adding steps that prepare it for global deployment via data distribution channels to HDTV, mobile devices, and websites. Each of these steps is meticulously recorded and made available for analysis.[3]

The digital value chain is presented linearly here, but most data activities that add value operate as cycles. New ideas are conceived, new content is added, questions are constantly asked, and new ways of monetizing content are developed. How audiences consume the content also matters. This leads the process back to the initial conception of content, now informed by audience/consumer analytics and feedback.

Through this analysis, the major value-creating steps of the content production and marketing process can be systematically identified and managed. In the graph above, core steps in digital production and the linkages between them are identified in the context of advanced digital technologies. These are general representations, but they provide a framework to analyze the activities and flow of content production. Identifying these processes helps to understand the equipment, logistics, and skill sets involved in modern media production, as well as the processes that lead to waste.

In other posts I will discuss each of the following:

Content Creation and Production

Content Storage and Management

Content E-Commerce

Content Distribution and Delivery

Content Usability and Consumption

Content Analytics and Critique

Notes

[1] Porter, Michael E. Competitive Advantage. 1985, Ch. 1, pp 11-15. The Free Press. New York.

[2] Rayport, Jeffrey F., and John J. Sviokla. “Exploiting the Virtual Value Chain.” Harvard Business Review, November–December 1995.

[3] Singh, Nitish. Localization Strategies for Global E-Business.

© ALL RIGHTS RESERVED

Anthony J. Pennings, PhD is Professor and Associate Chair of the Department of Technology and Society, State University of New York, Korea. Before joining SUNY, he taught at Hannam University in South Korea and from 2002-2012 was on the faculty of New York University. Previously, he taught at St. Edwards University in Austin, Texas, Marist College in New York, and Victoria University in New Zealand. He has also spent time as a Fellow at the East-West Center in Honolulu, Hawaii.

Tags: digital content lifecycle > digital content production > Michael Porter > value chain

Not Like 1984: GUI and the Apple Mac

Posted on | May 27, 2017 | No Comments

In January of 1984, during the Super Bowl, America’s most popular sporting event, Apple announced the release of the Macintosh computer. It did so with a commercial that was shown only once, causing a stir and gaining millions of dollars in free publicity afterward. The TV ad was directed by Ridley Scott, whose credits at the time included movies like Alien (1979) and Blade Runner (1982).

Scott drew on iconography from Fritz Lang’s Metropolis (1927) and George Orwell’s classic novel 1984 to produce a stunning dystopic metaphor of what life would be like under what was suggested as a monolithic IBM with a tinge of Microsoft. As human drones file into a techno-decrepit auditorium, they become transfixed by a giant “telescreen” filled with a close-up of a man, eerily reminiscent of an older Bill Gates. Intense eyes peer through wire-rimmed glasses and glare down on the transfixed audience as lettered captions transcribe mind-numbing propaganda:

We are one people with one will, one resolve, one cause.

Our Enemies will talk themselves to death, and we will bury them with their confusion.

For we shall prevail.

However, from down a corridor, a brightly-lit female emerges. She runs into the theater and down the aisle. Finally, she winds up and throws a sledgehammer into the projected face. The televisual screen explodes, and the humans are startled out of their slumbered daze. The ad fades to white, and the screen lights up: “On January 24th, Apple Computer will introduce Macintosh. And you’ll see why 1984 won’t be like ‘1984.’” The reason, of course, was what Steve Jobs called the “insanely great” new technology of the Macintosh unveiled by Apple. Against a black background, it ends with the famed logo, a rainbow-striped Apple with a bite out of the right side.

By 1983, Apple needed a new computer to compete with the IBM PC. Steve Jobs went to work, utilizing mouse and GUI (Graphical User Interface) technology developed at Xerox PARC in the late 1970s. In exchange for being allowed to buy 100,000 shares of Apple stock before the company went public, Xerox opened its R&D at PARC to Jobs.[17] Xerox was a multibillion-dollar company with a near monopoly on the copier needs of the Great Society’s great bureaucratic structures. In an attempt to leverage its position to dominate the “paperless office,” Xerox sponsored the research and development of a number of computer innovations, but the Xerox leaders never understood the potential of the technology developed under their roofs.

One of these innovations was a powerful but expensive microcomputer called the Alto that integrated many of the new interface technologies that would become standard on personal computers. The new GUI system had the mouse, networking capability, and even a laser printer. It combined a number of PARC innovations including bitmapped displays, hierarchical and pop-up menus, overlapped windows, tiled windows, scroll bars, push buttons, check boxes, cut/move/copy/delete and multiple fonts as well as text styles. Xerox didn’t know quite how to market the Alto, so it gave its microcomputer technology to Apple for an opportunity to buy the young company’s stock.

Apple took this technology and created the Lisa computer, an expensive but impressive prototype of the Macintosh. In 1983, the same year that Lotus officially released its Lotus 1-2-3 spreadsheet program, Apple released Lisa Calc along with six other applications: LisaWrite, LisaList, LisaProject, LisaDraw, LisaPaint, and LisaTerminal. Lisa Calc was the first spreadsheet program to use a mouse, but at a price approaching $10,000, the Lisa proved less than economically feasible. It did, however, inspire Apple to develop the Macintosh and software companies such as Microsoft to begin preparing software for the new style of computer.

The Apple Macintosh was based on the GUI, often called WIMP for its Windows, Icons, “Mouse,” and Pull-down menus. The Apple II and IBM PC were still based on something called a command line interface, a “black hole” next to a > prompt that required commands to be typed in and executed. This system required extensive prior knowledge and/or access to readily available technical documentation. The GUI, on the other hand, allowed you to point to information already on the screen or to categories that contained subsets of commands. Eventually, menu categories such as File, Edit, View, Tools, and Help were standardized at the top of GUI screens.

A crucial issue for the Mac was good third-party software that could work in its GUI environment, especially a spreadsheet. Representatives from Jobs’ Macintosh team visited the fledgling companies that had previously supplied microcomputer software. Good software came from companies like Telos Software, which produced the picture-oriented FileVision database, and Living Videotext, which sold an application called ThinkTank that created “dynamic outlines.” Smaller groupings also contributed: a collaboration by Jay Bolter, Michael Joyce, and John B. Smith created a program called Storyspace that was a hit with writers and English professors.

PARC was a research center supported by Xerox’s near monopoly on paper-based copying that grew tremendously with the growth of corporate, military and government bureaucracies during the 1960s. Interestingly, in 1958, IBM passed up an opportunity to buy a young company that had developed a new copying technology called “xerography.” The monopoly gave them the freedom to set up a relatively unencumbered research center to lead the company into the era of the “paperless office.” One of the outcomes of this research was the GUI technology.

Unfortunately for Xerox, the company failed to capitalize on these new technologies and subsequently sold its technology in exchange for the right to buy millions of dollars in Apple stock. Jobs and Apple used the technology to design and market the Lisa computer and then, during the 1984 Super Bowl, dramatically announced the Macintosh. The “Mac” was a breath of fresh air for consumers who were intimidated by the “command-line” techno-philosophy of the IBM computer and its clones.

Notes

[17] Rose, Frank (1989) East of Eden: The End of Innocence at Apple Computer. NY: Viking Penguin Group. p.47.

© ALL RIGHTS RESERVED

Anthony J. Pennings, PhD is Professor and Associate Chair of the Department of Technology and Society, State University of New York, Korea. Before joining SUNY, he taught at Hannam University in South Korea and from 2002-2012 was on the faculty of New York University. Previously, he taught at St. Edwards University in Austin, Texas, Marist College in New York, and Victoria University in New Zealand. He has also spent time as a Fellow at the East-West Center in Honolulu, Hawaii.

Tags: 512K “fat Mac” > Excel > Excel 2.2 for the Macintosh > Excel 5.0 > Great Society > GUI history > Lisa Calc > Lotus 1-2-3 > Microsoft’s Multiplan > Quattro Pro > Visual Basic Programming System > Windows 1.0 > Xerox PARC

A First Pre-VisiCalc Attempt at Electronic Spreadsheets

Posted on | May 25, 2017 | No Comments

Computerized spreadsheets were conceived in the early 1960s when Richard Mattessich at the University of California at Berkeley conceptualized the electronic simulation of business accounting techniques in his Simulation of the Firm through a Budget Computer Program (1964). Mattessich envisaged the use of “accounting matrices” to provide a rectangular array of bookkeeping figures that would help analyze a company through numerical modeling. Mattessich’s thinking would predate popular spreadsheet programs like VisiCalc, Lotus 1-2-3, Excel, and Google Sheets.

The development of the first actual computerized spreadsheet was based on an algebraic model written by Mattessich and developed by two of his assistants, Tom Schneider and Paul Zitlau. Together they developed a working prototype using the Fortran IV programming language. It contained the basic ingredients of the electronic spreadsheet, including the crucial support of the individual figures in cells by entire calculative formulas behind each entry.[1]
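The idea of figures in cells backed by formulas can be illustrated with a minimal sketch. This is not a reconstruction of the Fortran IV prototype; it is a small Python illustration, and the class name `Sheet`, the cell names, and the budget figures are all hypothetical.

```python
from typing import Callable, Dict, Union

# A cell holds either a plain figure or a formula that reads other cells.
Cell = Union[float, Callable[..., float]]


class Sheet:
    """Toy budget sheet (hypothetical): figures in cells, formulas behind them."""

    def __init__(self) -> None:
        self.cells: Dict[str, Cell] = {}

    def set(self, name: str, value: Cell) -> None:
        self.cells[name] = value

    def get(self, name: str) -> float:
        cell = self.cells[name]
        # Formulas are re-evaluated on every read, so a changed input
        # flows through to every figure that depends on it.
        return cell(self) if callable(cell) else float(cell)


budget = Sheet()
budget.set("sales", 1000.0)   # illustrative figures only
budget.set("costs", 600.0)
budget.set("profit", lambda s: s.get("sales") - s.get("costs"))
budget.set("margin", lambda s: s.get("profit") / s.get("sales"))

first_profit = budget.get("profit")
budget.set("costs", 700.0)    # change one input figure
second_profit = budget.get("profit")
print(first_profit, second_profit)
```

Changing a single input and reading the dependent cell again is the kind of "what if" simulation that the budget model was meant to support.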

Mattessich was sanguine about his invention, recognizing that it was a time when computers were being considered for a number of simulation projects, so it was “reasonable to exploit this idea for accounting purposes.”[2] These other projects included the modeling of ecological and weather systems as well as national economies. In fact, Mattessich’s concurrent work on national accounting systems was better received, and his book on the topic became a classic in its own right.[3] Mattessich’s attempts soon fell into obscurity because mainframe technology was not as powerful or as interactive as the microprocessor-powered personal computers that followed.

The computer systems of the mid-1960s were not conducive to the type of interactivity that would make spreadsheets so attractive in the 1980s. Computers were big, secluded, and attended to by a slew of programmer acolytes that religiously protected their technological and knowledge domains. They were the domain of the EDP department and removed from all but the highest management by procedures, receptionists, and security precautions.

Computers ran their programs in groups or “batches” of punched cards delivered to highly sequestered data processing centers, and the results were picked up some time later: sometimes hours, sometimes days. Batch processing was used primarily for payroll, accounts payable, and other accounting processes that could be done on a scheduled basis. The introduction of minicomputers using integrated circuits, such as DEC’s PDP-8, meant more companies could afford to have computers, but they did not significantly change their accounting procedures or herald the use of Mattessich’s spreadsheet.

Although the earliest PCs were weaker than their bigger contemporaries, the mainframes, and even the minicomputers, they had several advantages that increased their usefulness. Their main advantage was immediacy; the microcomputer was characterized in part by its accessibility: it was small, relatively cheap, and available via a number of retail outlets. It used a keyboard for human input, a cathode ray monitor to view data, and a newly invented floppy disk for storage.

But just as important was the fact that it bypassed the traditional data processing organization that was constantly striving to keep up with new processing requests. One implication was that frustrated accountants would go out and buy their own computers and software packages over the objections or indifference of the EDP department. It also meant a new flexibility in terms of the speed and amount of information immediately available. Levy recounts the following comments from a Vice-President of Data Processing at Connecticut Mutual who eventually bought one of the earliest microcomputer spreadsheet programs to do his own numerical analyses:

DP always has more requests than it can handle. There are two kinds of backlog – the obvious one, of things requested, and a hidden one. People say, “I won’t ask for the information because I won’t get it anyway.” When those two guys designed VisiCalc, they opened up a whole new way. We realized that in three or four years, you might as well take your big minicomputer out on a boat and make an anchor out of it. With spreadsheets, a microcomputer gives you more power at a tenth the cost. Now people can do the calculations themselves, and they don’t have to deal with the bureaucracy.[4]

Despite the increasing processing power of the mainframes and minis, and new interactivity due to timesharing and the use of keyboards and cathode ray screens, the use of computerized spreadsheets never increased significantly until the introduction of the personal computer. It was only after the spreadsheet idea was rediscovered in the context of the microprocessing leap made in the next decade that Mattessich’s ideas would be acknowledged.

Notes

[1] Mattessich and Galassi credit assistants Tom Schneider and Paul Zitlau with the development of the first actual computerized spreadsheet based on an algebraic model written by Mattessich. It was reported in “The History of Spreadsheet: From Matrix Accounting to Budget Simulation and Computerization”, a paper presented at the 8th World Congress of Accounting Historians in Madrid, August 2000. See Mattessich, Richard, and Giuseppe Galassi. “History of the Spreadsheet: From Matrix Accounting to Budget Simulation and Computerization.” Accounting and History: A Selection of Papers Presented at the 8th World Congress of Accounting Historians: Madrid-Spain, 19–21 July 2000. Asociación Española de Contabilidad y Administración de Empresas, AECA, 2000. See also George J. Murphy’s “Mattessich, Richard V. (1922-),” in Michael Chatfield and Richard Vangermeersch, eds., The History of Accounting–An International Encyclopedia (New York: Garland Publishing Co., Inc, 1997): 405.

[2] This case of Richard Mattessich developing the first electronic spreadsheets has been made extensively including in “The History of Spreadsheet: From Matrix Accounting to Budget Simulation and Computerization”, a paper presented at the 8th World Congress of Accounting Historians in Madrid, August 2000 by Richard Mattessich and Giuseppe Galassi.

[3] Mattessich’s Accounting and Analytical Methods: Measurement and Projection of Income and Wealth in the Micro and Macro Economy (1964) was published by Irwin and was part of that movement towards national accounting systems. A later version was published as Mattessich, Richard. Accounting and Analytical Methods: Measurement and Projection of Income and Wealth in the Micro- and Macro-Economy. Scholars Book Company, 1977. It was mentioned in Chapter 3: Statistics: the Calculating Governmentality of my PhD dissertation, Symbolic Economies and the Politics of Global Cyberspaces (1993).

[4] Levy, S. (1989) “A Spreadsheet Way of Knowledge,” in Computers in the Human Context: Information Technology, Productivity, and People. Tom Forester (ed) Oxford, UK: Basil Blackwell.

© ALL RIGHTS RESERVED

Anthony J. Pennings, PhD is Professor and Associate Chair of the Department of Technology and Society, State University of New York, Korea. Before joining SUNY, he taught at Hannam University in South Korea and from 2002-2012 was on the faculty of New York University. Previously, he taught at St. Edwards University in Austin, Texas, Marist College in New York, and Victoria University in New Zealand. He has also spent time as a Fellow at the East-West Center in Honolulu, Hawaii.

Tags: Excel > Google Sheets > Lotus 1-2-3 > Richard Mattessich > VisiCalc